Data smoothing is a form of low-pass filtering, which means that it blocks out the high-frequency components (short wiggles) in order to emphasise the low-frequency ones (longer trends).

There are two popular forms: (a) the running mean (or moving average) and (b) the exponentially weighted average. Both can be implemented by means of efficient recursive formulae.
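As a rough sketch of what those recursive formulae can look like (the function names, the `window` and `alpha` parameters, and the choice to seed the exponential average with the first sample are my own assumptions, not something fixed by these notes):

```python
from collections import deque


def running_mean(values, window):
    """Moving average using the recursion: sum_new = sum_old + x_new - x_oldest."""
    if window < 1:
        raise ValueError("window must be at least 1")
    buf = deque()   # the samples currently inside the window
    total = 0.0     # running sum of those samples
    out = []
    for x in values:
        buf.append(x)
        total += x
        if len(buf) > window:
            total -= buf.popleft()  # drop the sample that left the window
        # Until the window fills, average over the samples seen so far.
        out.append(total / len(buf))
    return out


def exponential_average(values, alpha):
    """Exponentially weighted average: s_t = alpha * x_t + (1 - alpha) * s_(t-1)."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out = []
    s = None
    for x in values:
        # Assumption: the first sample seeds the average (s_0 = x_0).
        s = x if s is None else alpha * x + (1 - alpha) * s
        out.append(s)
    return out
```

Both functions do a constant amount of work per new sample, which is the point of the recursive form. A smaller `alpha` or a larger `window` gives heavier smoothing.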

Or, from an image processing website:

Smoothing is a process by which data points are averaged with their neighbours in a series, such as a time series, or image. This (usually) has the effect of blurring the sharp edges in the smoothed data. Smoothing is sometimes referred to as filtering, because smoothing has the effect of suppressing high-frequency signal and enhancing low-frequency signal. There are many different methods of smoothing... (need to rewrite)

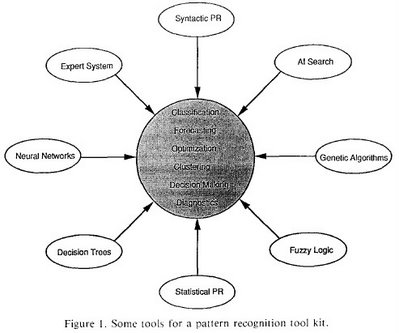

What I am doing is using a heuristic technique.

I am only filtering out values that occur very rarely and are numerically very large, to eliminate the bias that such large values introduce into the set.
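One possible heuristic of this kind, which is not necessarily the one I end up using, is to drop values that sit far above the median. The sketch below is illustrative only: the function name `drop_rare_large_values`, the cutoff factor `k`, and the use of the median absolute deviation (MAD) as the spread measure are all assumptions.

```python
import statistics


def drop_rare_large_values(values, k=3.0):
    """Drop values more than k median-absolute-deviations above the median.

    Hypothetical helper: only the high side is filtered; small values are kept.
    """
    values = list(values)
    if not values:
        return values
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # No measurable spread, so there is no sensible cutoff; keep everything.
        return values
    threshold = med + k * mad
    return [v for v in values if v <= threshold]
```

For example, `drop_rare_large_values([10, 12, 11, 13, 12, 11, 500])` removes only the `500`, because the median is 12 and the MAD is 1, giving a cutoff of 15.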